NeuralByte's weekly AI rundown - 4th February

New model leaked model from Mistral reaching to GTP-4 level, Google's new image generator and many, many more!

Greetings fellow AI enthusiasts!

This week in we have many news from Google and open source scene. There is also first human with Neuralink brain chip. Learn more in today’s rundown! Hope you’ll like it.

🧠 Stay curious!

Dear subscribers,

Thanks for reading my newsletter and supporting my work. I have more AI content to share with you soon. Everything is free for now, but if you like my work, please consider becoming a paid subscriber. This will help me create more and better content for you.

Now, let's dive into the AI rundown to keep you in the loop on the latest happenings:

🖼️ Google’s ImageFX: A New AI Image Generator

🕵️ Mistral Medium Leak Confirmed: What You Need to Know About Miqu 70b

🧠 Elon Musk’s Neuralink claims to have implanted its first brain chip in a human

💼 Microsoft shows how AI is shaping future of work

🧑💻 Meta’s Code Llama 70B: A free AI tool rivaling GPT-4 in advanced coding

🗺️ Google Maps is using generative AI to chat with users and suggest places

☁️ Google Cloud teams up with Hugging Face to boost AI development

☣️ OpenAI explores how good GPT-4 is at creating bioweapons

🤖 Tencent Surveys MultiModal Large Language Models for Diverse Tasks

🛍️ Amazon debuts ‘Rufus’, an AI shopping assistant in its mobile app

📝 Weaver: A New Family of Large Language Models for Creative Writing

And more!

Google’s ImageFX: A New AI Image Generator

Google has been a leader in the AI race, developing various tools and products powered by artificial intelligence. However, the company was slow to enter the AI image generator space, keeping its Imagen model private until recently. On Thursday, Google announced a series of AI updates, with a focus on image generation.

The biggest highlight was ImageFX, a new image-generation tool that users can access in Google Labs, the company’s experimental platform. ImageFX allows users to generate images from text the same way they would with any other AI model, such as DALL-E 3. However, ImageFX has a unique feature: expressive chips, which enable users to experiment with different dimensions of their creation and ideas, such as style, mood, color, and more.

The details:

ImageFX is powered by Imagen 2, Google’s most advanced text-to-image model, developed by Google DeepMind and released last month. Imagen 2 can generate high-quality images, even rendering challenging tasks such as human faces and hands realistically.

Imagen 2 will also be integrated into Google Bard, the company’s AI chatbot, giving it the ability to generate images for the first time. Users can simply ask Bard to generate a photo using a conversational prompt, and Bard will respond with an image.

Google is also infusing Imagen 2 across its offerings, including Ads, Duet AI in Workspace, SGE, and Google Cloud’s Vertex AI.

To address concerns regarding the misuse of AI image generators, Google has implemented guardrails to prevent generating violent, offensive, and sexually explicit content. Additionally, all images generated with Imagen 2 will be watermarked with SynthID, a tool developed by Google DeepMind that can identify AI-generated images.

Why it’s important:

AI image generation is a rapidly evolving field that has many potential applications and implications. Google’s entry into this space is a significant move that showcases the company’s innovation and ambition. By making Imagen 2 available to the public, Google is opening up new possibilities for creativity, communication, and education. However, Google is also aware of the risks and challenges of AI image generation, such as misinformation, manipulation, and ethical issues. Therefore, Google is taking steps to ensure responsible AI use, such as watermarking, metadata, and guardrails. Google’s AI image generator updates are a testament to the power and complexity of AI, and the need for balance and accountability.

Mistral Medium Leak Confirmed: What You Need to Know About Miqu 70b

The AI community was in for a surprise when a new language model called Miqu 70b appeared on HuggingFace, a popular open-source AI platform. The model, which showed impressive performance and potential, was later confirmed to be a quantized version of an older model from Mistral, a Paris-based AI company. The leak revealed Mistral’s progress and ambition in the field of AI, as well as some interesting details about Miqu 70b.

The details:

Miqu 70b was uploaded by a user named “Miqu Dev” on HuggingFace, sparking curiosity and speculation about its origin and capabilities.

The model was analyzed by the AI community across various platforms, such as X, LinkedIn, and 4chan, with some comparing it to GPT-4, the latest and most advanced language model from OpenAI.

Arthur Mensch, the CEO of Mistral, confirmed that Miqu 70b was a quantized and watermarked version of an old Mistral model, accidentally leaked by an employee of an early access customer.

Mistral is a leading AI company in Paris, founded by ex-Meta and Google employees, that develops open-source AI technologies, such as Mistral-7b and Mixtral 8x7B, using sparse mixture-of-experts models.

Miqu 70b is a retrained version of Meta’s Megatron-LM, a large-scale language model that uses model parallelism to scale up to billions of parameters.

Miqu 70b is a 70 billion parameter model, which is comparable to GPT-4’s 72 billion parameter model, but uses less memory and computational resources due to quantization, a technique that reduces the precision of the model’s weights.

Miqu 70b can generate fluent and coherent texts on various topics and domains, such as news, fiction, poetry, code, and more, as well as answer questions and perform tasks, such as solving puzzles and translating languages.

Why it’s important:

The leak of Miqu 70b is a significant event for the open-source AI landscape, as it showcases the potential and innovation of Mistral, a relatively new and lesser-known player in the field. It also shows the potential of open source models to compete with industry giants like Microsoft, Google, or OpenAI.

Elon Musk’s Neuralink claims to have implanted its first brain chip in a human

The details:

The Neuralink chip, called Telepathy, is powered by a battery that can be charged wirelessly and can record and transmit brain signals to an app that decodes how the person intends to move.

The chip is implanted by a robot that places 64 flexible threads, thinner than a human hair, on a part of the brain that controls “movement intention”.

The first human recipient of the chip is a person with quadriplegia, or paralysis of all four limbs, who is participating in a six-year study to evaluate the safety and functionality of the implant.

Neuralink is not the only company working on brain-computer interfaces, as it faces competition from other startups and research institutions that have already implanted similar devices in humans and animals.

Neuralink has also faced some challenges, such as being fined for violating US Department of Transportation rules regarding the movement of hazardous materials and having some of its co-founders leave the company.

Why it’s important:

Neuralink’s breakthrough could have a huge impact on the lives of millions of people who suffer from various forms of paralysis, as well as other neurological disorders such as Alzheimer’s, Parkinson’s, epilepsy, and depression. By enabling direct communication between the brain and machines, Neuralink could potentially restore mobility, speech, memory, and mood to those who have lost them. Moreover, Neuralink could also open up new possibilities for human enhancement, such as augmenting cognitive abilities, accessing digital information, and experiencing virtual reality. However, Neuralink also raises ethical, social, and legal questions, such as the risks of hacking, privacy, consent, and regulation. Neuralink is one of the most ambitious and controversial projects in the field of AI, and its future implications are both exciting and uncertain.

Microsoft shows how AI is shaping the future of work

Microsoft has released its third annual New Future of Work Report, which summarizes the latest research findings and insights on how AI and hybrid work are transforming the workplace and society. The report covers topics such as productivity, collaboration, well-being, accessibility, and inclusion.

The details:

The report highlights the benefits and challenges of hybrid work, which combines remote and in-person work modes.

The report showcases how Microsoft is using AI to enhance its own hybrid work practices and products, such as Teams, Outlook, and Copilot.

The report also explores the ethical and social implications of AI and hybrid work, such as privacy, security, fairness, and accountability. The report emphasizes the need for human-centered design, responsible innovation, and stakeholder engagement to ensure that AI and hybrid work are aligned with human values and goals.

The report draws on the expertise and experience of over 100 researchers and practitioners from Microsoft Research, Microsoft 365, and other divisions, as well as external collaborators from academia and industry.

Why it’s important:

AI and hybrid work are reshaping the way we work and live in the 21st century. They offer new opportunities and challenges for individuals, organizations, and society. Microsoft’s New Future of Work Report provides a comprehensive and timely overview of the current state and future directions of these trends, as well as practical guidance and recommendations for navigating them successfully.

Meta’s Code Llama 70B: A free AI tool rivaling GPT-4 in advanced coding

Meta, a company that develops open-source large language models (LLMs), has released Code Llama 70B, a state-of-the-art AI tool that can generate code from natural language prompts. Code Llama 70B is the largest and best-performing model in the Code Llama family, with 70 billion parameters and trained on 1 trillion tokens of code and code-related data. Code Llama 70B is available for free for research and commercial use.

The details:

Code Llama 70B can generate code, and natural language about code, from both code and natural language prompts (e.g., "Write me a function that outputs the Fibonacci sequence.")

It supports many of the most popular languages being used today, including Python, C++, Java, PHP, Typescript (Javascript), C#, and Bash.

The model outperforms other publicly available LLMs on code tasks, such as code completion, debugging, and documentation.

Meta’s Code Llama 70B can handle up to 100,000 tokens of context, allowing it to generate longer and more relevant programs.

It is built on top of Llama 2, Meta's open-source LLM that can handle a variety of natural language tasks, such as reasoning, proficiency, and knowledge tests.

Why it’s important:

Code Llama 70B is a powerful and versatile AI tool that can make coding faster and easier for developers of all skill levels. It can also lower the barrier to entry for people who are learning to code, by providing guidance and feedback. Code Llama 70B is part of Meta’s vision to create open and responsible AI that can benefit society.

Google Maps is using generative AI to chat with users and suggest places

Google Maps wants to help users discover new places and experiences that match their interests and preferences. To do that, Google is using generative AI, a type of artificial intelligence that can create new content from existing data. Google Maps will use its large-language models (LLM), which can generate text and suggestions from over 250 million saved places and reviews from over 300 million contributors.

Users will be able to ask Google Maps what they are looking for, such as “places with a vintage vibe in SF”. The app will then analyze the information about nearby businesses and places, along with photos, ratings, and reviews, and give users trustworthy suggestions. The results will be organized into categories, such as clothing stores, vinyl shops, and flea markets. Users can also ask follow-up questions, such as “How about lunch?”, and get more recommendations that fit their criteria.

The details:

The generative AI feature in Google Maps is launching this week as an early access experiment to select Local Guides, with plans to roll out the feature generally over time.

The feature uses neural radiance fields (NeRF), an advanced AI technique, to create 3D images out of ordinary pictures. This gives the user an idea of a place’s lighting, the texture of materials, or pieces of context, such as what’s in the background.

The model is similar to ChatGPT, a generative AI tool that lets users chat with various personalities and topics. ChatGPT uses a large-scale neural network that can generate coherent and engaging conversations.

The feature is part of Google’s broader efforts to use generative AI in its products, such as Google Photos, Google Lens, and Google Translate. Google says that generative AI can help users explore and better understand the world.

Why it’s important:

Generative AI is one of the most promising and exciting fields of AI research. It has the potential to create new forms of art, entertainment, education, and communication. It can also help users find relevant and useful information, products, and services.

Google Maps is using generative AI to enhance its user experience and offer more value to its users. By letting users chat with the app and get more nuanced responses and suggestions, Google Maps is becoming more than just a navigation tool. It is becoming a personal assistant and a travel guide.

Google Cloud teams up with Hugging Face to boost AI development

Google Cloud, the cloud computing division of Alphabet Inc, announced on Thursday that it had formed a strategic partnership with Hugging Face, a startup that provides open source AI software and tools. The partnership aims to simplify and accelerate the development of generative AI and machine learning applications in Google Cloud.

Generative AI is a branch of artificial intelligence that can create new content or data, such as text, images, audio, or code, based on existing data or models. Hugging Face is one of the leading providers of generative AI software, such as natural language processing (NLP) models and datasets, that are widely used by researchers and developers.

The partnership will allow Google Cloud customers to easily access and deploy Hugging Face’s repository of open source AI software, such as Transformers, a library of state-of-the-art NLP models and algorithms. Google Cloud will also offer technical support and integration for Hugging Face’s software, as well as advanced hardware options, such as Google’s tensor processing units (TPUs) and Nvidia’s H100 AI chips, to run Hugging Face’s workloads.

The details:

Google Cloud becomes a strategic cloud partner for Hugging Face, and a preferred destination for Hugging Face training and inference workloads.

The partnership advances Hugging Face’s mission to democratize AI and furthers Google Cloud’s support for open source AI ecosystem development.

Google Cloud customers will be able to use Hugging Face’s software to build and fine-tune their own AI models for specific tasks and needs, such as text generation, summarization, translation, sentiment analysis, and more.

Google Cloud will also provide tools and services to help customers ensure the quality, safety, and reliability of their AI models, such as data leakage prevention, model monitoring, and explainability.

Hugging Face’s repository of AI-related software will roughly double in size in the next four months, reflecting the growing demand and interest in generative AI and machine learning.

Why it’s important:

AI is becoming a key driver of innovation and transformation for businesses across various industries and domains. Generative AI, in particular, has the potential to unlock new possibilities and opportunities for creating value and solving problems. By partnering with Hugging Face, Google Cloud is making it easier and faster for customers to leverage the power and potential of generative AI and machine learning, and to stay ahead of the curve in the rapidly evolving AI landscape.

OpenAI explores how good GPT-4 is at creating bioweapons

OpenAI, a leading AI research organization, has conducted an experiment to measure whether a large language model (LLM) like GPT-4 could aid someone in creating a biological threat. The experiment involved 100 human participants, including biology experts and students, who were asked to complete tasks related to different stages of the biological threat creation process. The results showed that GPT-4 provides at most a mild uplift in biological threat creation accuracy, compared to the baseline of existing resources on the internet.

The details:

The experiment was designed to evaluate the risk that a malicious actor could use a highly-capable model like GPT-4 to develop a step-by-step protocol, troubleshoot wet-lab procedures, or even autonomously execute steps of the biothreat creation process.

The participants were randomly assigned to either a control group, which only had access to the internet, or a treatment group, which had access to GPT-4 in addition to the internet. They were then asked to complete a set of tasks covering aspects of the end-to-end process for biological threat creation, such as ideation, acquisition, magnification, formulation, and release.

The study assessed the performance of the participants with access to GPT-4 across five metrics: accuracy, completeness, innovation, time taken, and self-rated difficulty. The study also measured the participants’ perceptions of GPT-4’s usefulness and trustworthiness.

The study found that GPT-4 provides at most a mild uplift in biological threat creation accuracy, which is not large enough to be conclusive. The study also found that GPT-4 does not significantly improve the completeness, innovation, or time taken of the participants’ tasks. The participants rated the tasks as slightly easier with GPT-4, but also expressed mixed views on GPT-4’s usefulness and trustworthiness.

Why it’s important:

This study is one of the first attempts to empirically evaluate the potential misuse of AI for biological threat creation, which is a serious concern for the AI safety community and policymakers. The study provides a blueprint for developing more rigorous and comprehensive evaluations of AI’s impact on biorisk information, as well as a baseline for future studies with more advanced models. The study also highlights the need for continued research and community deliberation on the ethical and social implications of AI-enabled biological threats.

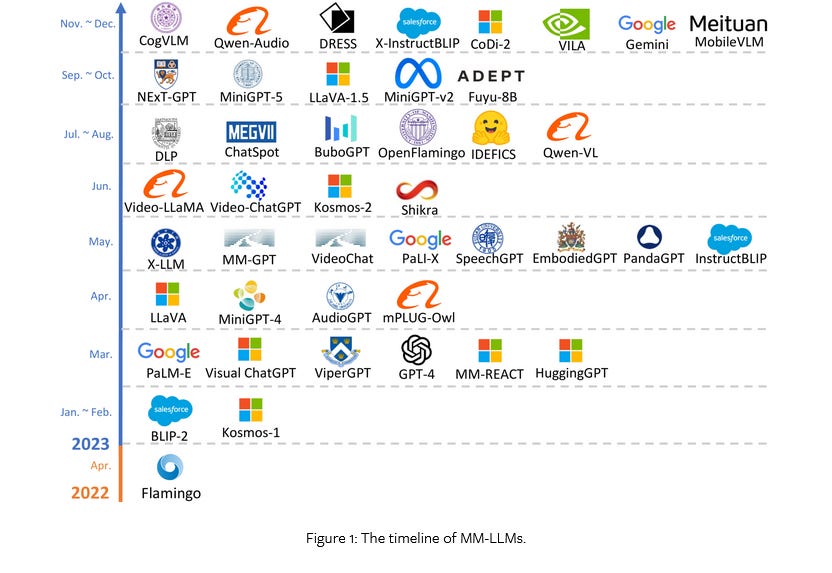

Tencent Surveys MultiModal Large Language Models for Diverse Tasks

MultiModal Large Language Models (MM-LLMs) are a new class of models that combine the power of language models with the ability to process and generate multiple modalities, such as images, videos, audio, and text. MM-LLMs have shown impressive results on various tasks, such as visual question answering, image captioning, video dialogue, and more. In this paper, the authors provide a comprehensive overview of the design, training, and evaluation of MM-LLMs, as well as the challenges and opportunities for future research.

The details:

The paper outlines the general model architecture of MM-LLMs, consisting of five components: Modality Encoder, Input Projector, LLM Backbone, Output Projector, and Modality Generator.

The study reviews 26 state-of-the-art MM-LLMs, each characterized by its specific formulations and modalities. The paper also summarizes the development trends and key training recipes of MM-LLMs.

It compares the performance of MM-LLMs on 18 mainstream benchmarks, covering different modalities and tasks. The paper also discusses the limitations and biases of existing benchmarks and datasets.

The paper explores promising directions for MM-LLMs, such as any-to-any modality conversion, instruction following, human feedback, and ethical and social implications. The paper also maintains a real-time tracking website for the latest progress in the field.

Why it’s important:

MM-LLMs are a novel and exciting research area that aims to bridge the gap between natural and artificial intelligence. By leveraging the rich and diverse information from multiple modalities, MM-LLMs can enhance the understanding, reasoning, and generation capabilities of language models, and enable a wide range of applications and interactions. This paper provides a valuable and timely survey of the current state and future prospects of MM-LLMs, and serves as a useful reference for researchers and practitioners in the field.

Amazon debuts ‘Rufus’, an AI shopping assistant in its mobile app

Amazon has launched Rufus, an AI-powered shopping assistant that can chat with customers in its mobile app and help them find, compare, and buy products. Rufus is trained on Amazon’s product catalog and other web sources, and can suggest questions and recommendations based on the conversation context. Rufus is currently in beta for some U.S. users, and Amazon plans to improve and expand it in the future. Rufus is part of Amazon’s efforts to use AI to enhance the online shopping experience and gain a competitive edge.

Weaver: A New Family of Large Language Models for Creative Writing

Weaver is a new family of large language models (LLMs) for content creation, pre-trained on a corpus that improves their writing capabilities. Weaver has four models of different sizes, fine-tuned for different writing scenarios and aligned to professional writers’ preferences. Weaver supports retrieval-augmented generation and function calling, allowing it to integrate external knowledge and tools. Weaver outperforms generalist LLMs, such as GPT-4, on a benchmark for writing capabilities. Weaver can assist and inspire writers with various creative and professional writing tasks.

Quick news

Finalframe will launch version 2.0 with video editing features (link)

AInfiniteTV is the first YouTube channel streaming AI generative content 24/7. (link)

iOS 17.4, Apple Podcasts will introduce the capability to display auto-generated transcripts. (link)

Shopify debuts AI-powered image editor (link)

Amazon’s new AI helps enhance virtual try-on experiences (link)

OpenAI introduces GPT mentions (link)

OpenAI reveals new models, drop prices, and fixes ‘lazy’ GPT-4 (link)

For daily news from the AI and Tech world follow me on:

Be better with AI

In this section, we will provide you with comprehensive tutorials, practical tips, ingenious tricks, and insightful strategies for effectively employing a diverse range of AI tools.

How to use Niji model for free:

Social media were recently full of images from Midjourney’s Niji model which makes beautiful anime illustration.

But Remix AI, free AI generation app for iOS and Android launched 5 model including Niji.

Juggernaut XL

Dynavision XL SDXL

Niji Special Edition

LEOSAM's HelloWorld

Animagine XL

You can try them right now at https://remix.ai/

Thanks to TechHalla for showing me this.

Tools

🤖 CodeGTP in VS studio - Excellent extension for VS studios (link)

😮💨 WhisperKit - Transcription and translation app for iPhone and Mac (link)

📸 LensGo.AI - LensGo.AI lets change the style of the video. (link)

🕵️ MultiOn - World's first AI personal agent. (link)

🧑💻Wanderer - Explore your career paths. (link)

We hope you enjoy this newsletter!

Please feel free to share it with your friends and colleagues and follow me on socials.