NeuralByte's weekly AI rundown - 25th February

This week was full of news about Google and Stability AI. Google released Gemma, its new family of open models, and had to pause image generation in Gemini after a backlash.

Greetings fellow AI enthusiasts!

This week was a whirlwind of news in the AI world! Google made headlines with the release of Gemma, its new family of open models. However, the company also faced a backlash that led it to pause image generation in Gemini.

Meanwhile, Stability AI continued to shake things up with the release of Stable Diffusion 3. This new iteration promises significant advancements in image generation capabilities.

Adding to the excitement, Magic AI claims significant strides toward AGI in its effort to build an AI coworker. The startup reportedly claims reasoning capabilities comparable to those of OpenAI's rumored Q* model.

Dear subscribers,

Thanks for reading my newsletter and supporting my work. I have more AI content to share with you soon. Everything is free for now, but if you like my work, please consider becoming a paid subscriber. This will help me create more and better content for you.

Now, let's dive into the AI rundown to keep you in the loop on the latest happenings:

🖼️ Stability AI unveils Stable Diffusion 3, a powerful text-to-image model

✨ Gemma: Google’s new open models for responsible AI development

🔮 Magic AI Raises $100 Million to Build a Coworker with Active Reasoning

🦾 How AI is changing the freelancing landscape: An analysis of 5 million jobs

⚡ Groq: The AI platform that delivers faster and smarter chatbots

☀️ New Chip Uses Light Waves to Perform AI Math

💵 Reddit inks $60 million AI content deal with Google

🍏 Apple’s AI ambitions: new features for Xcode, Spotlight, and more

🛑 Google pauses Gemini’s image generation feature after backlash

🤹♀️ AnyGPT: Your All-in-One AI for Speech, Text, Images, and Music

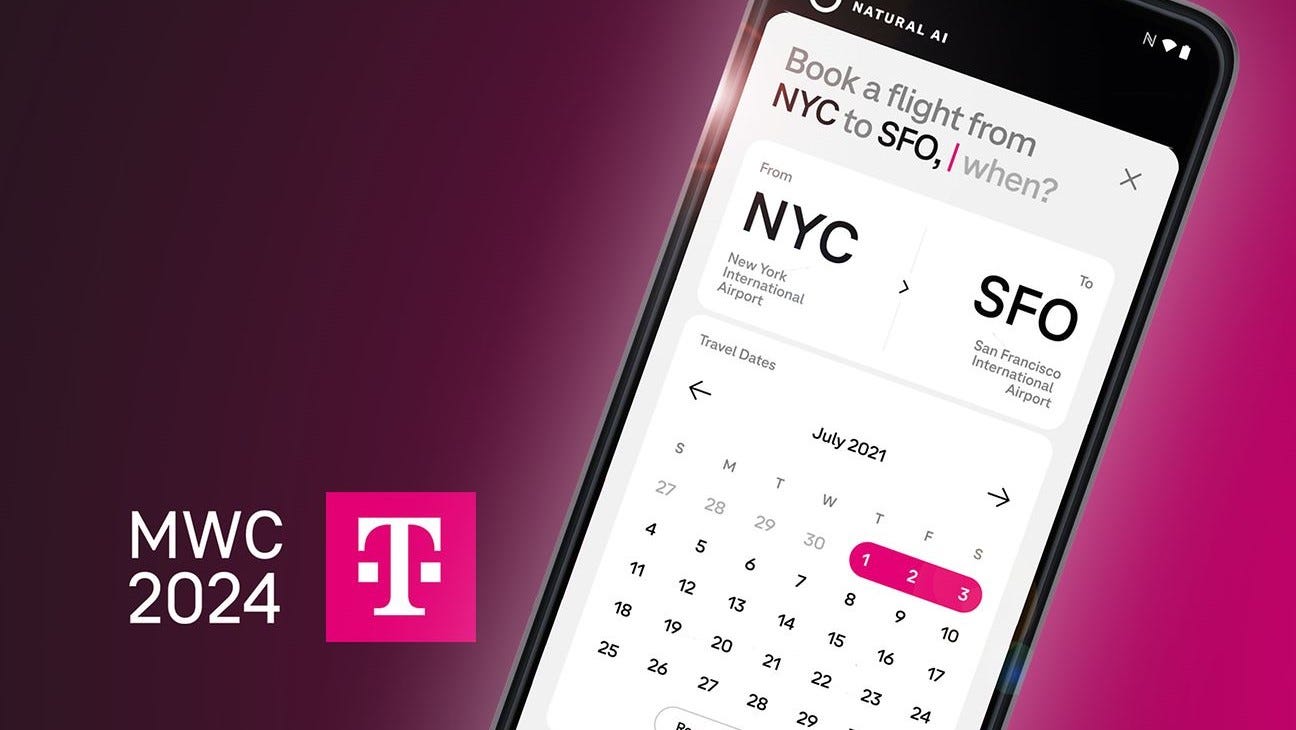

📱 Deutsche Telekom Presents App-Free AI Phone at MWC 2024

And more!

Stability AI unveils Stable Diffusion 3, a powerful text-to-image model

Stability AI, a company that specializes in generative AI models, has announced an early preview of Stable Diffusion 3, its latest text-to-image model. The company says it offers better image quality, better handling of multi-subject prompts, and stronger spelling abilities, meaning it renders legible text inside images more reliably. Stable Diffusion 3 builds on the team's earlier Stable Diffusion releases and aims to give users a range of options for scalability and quality to meet their creative needs.

Stable Diffusion 3 combines a diffusion transformer architecture with flow matching, which allows it to generate detailed, multi-subject images from text prompts. It is also better at rendering correctly spelled text within images, and it produces high-resolution, high-fidelity output. Stability AI claims that Stable Diffusion 3 is its most capable text-to-image model to date, and it plans to publish a detailed technical report soon.
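To make "flow matching" a bit more concrete, here is a minimal NumPy sketch of the rectified-flow form of the objective. A training sample is built by straight-line interpolation between a data point and Gaussian noise, and the network learns to predict the constant velocity along that line. Everything here is an illustrative assumption, not Stability AI's code. `toy_velocity_model` is a hypothetical stand-in for the diffusion transformer, timesteps are sampled uniformly for simplicity, and the function names are made up for this example.

```python
import numpy as np


def rectified_flow_pair(x0, noise, t):
    """Return the interpolated sample x_t and its velocity target.

    The path is a straight line from data (t = 0) to noise (t = 1).
    """
    x_t = (1.0 - t) * x0 + t * noise
    # Along a straight line the velocity dx_t/dt is constant: noise - x0.
    v_target = noise - x0
    return x_t, v_target


def flow_matching_loss(model, x0, rng):
    """Mean-squared error between predicted and target velocities for one batch."""
    noise = rng.standard_normal(x0.shape)
    # One timestep per example, shaped (batch, 1) so it broadcasts over features.
    t = rng.uniform(0.0, 1.0, size=(x0.shape[0], 1))
    x_t, v_target = rectified_flow_pair(x0, noise, t)
    v_pred = model(x_t, t)
    return float(np.mean((v_pred - v_target) ** 2))


def euler_sample(model, noise, steps=10):
    """Generate by integrating the velocity from t = 1 (noise) back to t = 0."""
    x = noise
    dt = 1.0 / steps
    for i in range(steps):
        t = np.full((x.shape[0], 1), 1.0 - i * dt)
        x = x - dt * model(x, t)
    return x


def toy_velocity_model(x_t, t):
    """Hypothetical stand-in for the diffusion transformer (always predicts zero)."""
    return np.zeros_like(x_t)


rng = np.random.default_rng(0)
x0 = rng.standard_normal((4, 8))  # a toy batch: 4 "latents" with 8 features each
print("toy loss:", flow_matching_loss(toy_velocity_model, x0, rng))
```

A perfect velocity predictor, one that returns exactly `noise - x0`, would let `euler_sample` land back on `x0` because the path is straight. A trained network only approximates that velocity.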

The details:

Stable Diffusion 3 is currently available in early preview, and users can sign up for the waitlist.

The model comes in different sizes, ranging from 800M to 8B parameters, to accommodate different devices and use cases.

Stability AI says it has taken, and continues to take, reasonable steps to prevent the misuse of Stable Diffusion 3 by bad actors, and that it has introduced numerous safety and ethics safeguards.

Stability AI also offers other image models for commercial use, such as Stable Diffusion XL Turbo, which can be accessed through its Stability AI Membership or Developer Platform.

Stability AI’s mission is to activate humanity’s potential by providing adaptable solutions that enable individuals, developers, and enterprises to unleash their creativity.

Why it’s important:

Text-to-image models are a powerful tool for digital creativity, as they can transform words into vivid visuals. They can also have various applications across different industries, such as education, entertainment, design, marketing, and more. Stability AI’s Stable Diffusion 3 is a promising example of how generative AI can advance the field of image synthesis, and offer users a new way of expressing their ideas and visions.

Gemma: Google’s new open models for responsible AI development

Google has introduced Gemma, a new generation of open models that are built from the same research and technology used to create Gemini, Google’s largest and most capable AI model. Gemma models are lightweight, state-of-the-art, and designed for responsible AI development. They are available in two sizes: Gemma 2B and Gemma 7B, each with pre-trained and instruction-tuned variants. Google also provides tools and guidance for creating safer and more reliable AI applications with Gemma.

The details:

Gemma models draw inspiration from Gemini. Their name comes from the Latin “gemma”, meaning “precious stone”.

Google says that, for their size, Gemma models achieve best-in-class performance among open models and even surpass some larger models on key benchmarks.

They come with a familiar KerasNLP API and an easily understandable Keras implementation, offering flexibility with JAX, PyTorch, and TensorFlow backends.

Gemma models support a new LoRA (Low-Rank Adaptation) API for parameter-efficient fine-tuning, which cuts the number of trainable parameters from billions to millions.

Gemma supports large-scale, distributed training and runs on multiple AI hardware platforms, including NVIDIA GPUs and Google Cloud TPUs.

Google's AI Principles are at the core of Gemma models. Automated techniques filter out certain personal information and other sensitive data during training, and safeguards exist to prevent harmful outputs.
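The LoRA claim is easy to check with arithmetic. LoRA freezes a weight matrix W of shape d × k and trains only a low-rank update B·A, where B is d × r and A is r × k. Each adapted matrix then contributes r·(d + k) trainable parameters instead of d·k. The sketch below assumes a hypothetical model with 28 layers of four 3072 × 3072 projection matrices and rank 4. Those figures are illustrative and are not taken from Gemma's published configuration. The helper names are also made up, and this is not the KerasNLP API.

```python
import numpy as np


def lora_trainable_params(d, k, r):
    """Trainable parameters in LoRA's factors B (d x r) and A (r x k)."""
    return r * (d + k)


def lora_forward(x, W, A, B, alpha, r):
    """Apply a frozen weight W (d x k) plus the scaled low-rank update B @ A.

    x has shape (n, k); the result has shape (n, d). Only A and B are trained.
    """
    return x @ W.T + (alpha / r) * (x @ A.T @ B.T)


# Hypothetical model size: 28 layers, each with four 3072 x 3072 projections.
layers, mats_per_layer, d = 28, 4, 3072
full = layers * mats_per_layer * d * d
lora = layers * mats_per_layer * lora_trainable_params(d, d, r=4)
print(f"full fine-tuning of these matrices: {full:,} parameters")
print(f"LoRA with rank 4:                   {lora:,} parameters")
```

With these made-up dimensions, the first count is about 1.06 billion and the second about 2.75 million, which matches the billions-to-millions reduction described above. LoRA implementations commonly initialize B to zeros, so the adapted layer starts out identical to the frozen one.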

Why it’s important:

Gemma models are a valuable contribution to the open AI community, as they enable developers and researchers to access some of Google’s AI research and technology for free. Gemma models can help solve a wide range of tasks and challenges, from natural language understanding to coding problems. Gemma models also demonstrate how AI can be developed and used responsibly, with respect for human values and social good.

Magic AI Raises $100 Million to Build a Coworker with Active Reasoning

Magic AI, a stealth startup founded in 2021, claims to have achieved a major breakthrough in artificial intelligence (AI), reaching “active reasoning” capabilities - a key step towards artificial general intelligence (AGI). The company has received a $100 million investment from former GitHub CEO Nat Friedman and others, who were impressed by its demos of a new type of large language model that can process massive amounts of data and context. Magic AI aims to use its technology to build a coworker, not just a copilot, for programmers and other professionals.

The details:

Magic AI claims its model can process 3.5 million words of text input, five times more than Google's latest Gemini model. The company suggests this larger capacity lets the AI work with information in a way that is closer to human cognition.

According to Magic AI, Google and OpenAI models rely primarily on pattern recognition, whereas its own model demonstrates active reasoning. This means it uses logical deduction to tackle new problems it was not specifically trained on.

If these claims hold up, Magic AI's active reasoning capabilities, paired with its massive context window, could mark a major step toward AGI.

Building on these advancements, Magic AI envisions a reasoning-based "coworker." This AI would analyze entire codebases and internet resources, offering intelligent support to programmers and similar professionals.

Magic AI attributes its swift progress to ample funding, skilled talent, and a hardware-aware model architecture with algorithms optimized for GPU kernels.

Why it’s important:

AI is one of the most powerful and disruptive technologies of our time, and achieving AGI is the ultimate goal of many researchers and companies. However, current AI systems remain limited in their ability to reason and adapt to new situations. If Magic AI’s claimed breakthrough holds up, it could unlock new possibilities and applications for AI, as well as new challenges and risks. By building a coworker with active reasoning, Magic AI hopes to give programmers and other professionals a powerful and reliable partner that can help them solve complex problems and create innovative solutions.

How AI is changing the freelancing landscape: An analysis of 5 million jobs

AI is not only transforming the way we work but also the way we find work. Freelancing platforms like Upwork, Fiverr, and Freelancer are increasingly using AI to match clients with freelancers, automate tasks, and optimize prices. But what does this mean for the freelancers themselves? Are they benefiting from AI or losing out to it? To answer these questions, Bloomberry analyzed 5 million freelancing jobs posted on these platforms in the past year and found some surprising trends.

The details:

The most in-demand freelancing skills are related to AI, such as data science, machine learning, natural language processing, computer vision, and chatbot development.

The average hourly rate for AI-related freelancing jobs is $40, which is 60% higher than the overall average of $25.

The fastest-growing freelancing categories are also AI-related, such as voice-over, transcription, translation, and content writing, which have seen a 300% increase in demand in the past year.

The most competitive freelancing categories are those that are being replaced by AI, such as graphic design, web development, video editing, and data entry, which have seen a 50% decrease in demand in the past year.

The most satisfied freelancers are those who are using AI to enhance their skills, such as using AI tools to generate content, design logos, edit videos, or transcribe audio.
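The 60% figure is a relative difference measured against the overall average. The quick check below uses only the two rates quoted above, and the helper name is illustrative. It also shows why such comparisons are asymmetric: the same $15 gap is a smaller share of the higher rate.

```python
def percent_difference(value, baseline):
    """Difference between value and baseline, as a percentage of baseline."""
    return (value - baseline) / baseline * 100


ai_rate, overall_rate = 40, 25  # USD per hour, as quoted in the analysis above
print(f"AI jobs pay {percent_difference(ai_rate, overall_rate):.0f}% more than the average")
print(f"The average pays {-percent_difference(overall_rate, ai_rate):.1f}% less than AI jobs")
```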

Why it’s important:

AI is reshaping the freelancing market, creating new opportunities and challenges for both clients and freelancers. Freelancers who want to succeed in this market need to adapt to the changing demands, learn new skills, and leverage AI to their advantage. Clients who want to find the best freelancers need to use AI to filter, evaluate, and negotiate with them. AI is not a threat to freelancing, but a catalyst for innovation and growth.

Groq: The AI platform that delivers faster and smarter chatbots

Groq is a company that develops high-performance processors and software for AI, machine learning, and high-performance computing applications. Most AI inference platforms run on graphics processing units (GPUs). Groq instead uses its own language processing units (LPUs), which are designed for running large language models (LLMs) such as Meta's Llama 2 and Mistral AI's Mixtral. Groq says this lets it run LLMs up to 10 times faster than GPU-based alternatives, enabling chatbots that respond in real time. Groq’s engine and API are available for anyone to try for free, without installing any software. The company describes its mission as delivering faster AI that can power a sustainable, clean-energy future.

New Chip Uses Light Waves to Perform AI Math

Penn Engineers have developed a new chip that uses light waves, rather than electricity, to perform the complex math essential to training AI. The chip has the potential to radically accelerate the processing speed of computers while also reducing their energy consumption.

The silicon-photonic (SiPh) chip’s design is the first to bring together Nader Engheta’s pioneering research in manipulating materials at the nanoscale to perform mathematical computations using light – the fastest possible means of communication – with the SiPh platform, which uses silicon, the cheap, abundant element used to mass-produce computer chips. The interaction of light waves with matter represents one possible avenue for developing computers that supersede the limitations of today’s chips, which are essentially based on the same principles as chips from the earliest days of the computing revolution in the 1960s.

The new chip is designed to perform vector-matrix multiplication, a core mathematical operation in the development and function of neural networks, the computer architecture that powers today’s AI tools. Instead of using a silicon wafer of uniform height, the chip has variations in height in specific regions, which cause light to scatter in specific patterns, allowing the chip to perform mathematical calculations at the speed of light.
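For readers who want to see what "vector-matrix multiplication" means inside a neural network, here is a small digital NumPy sketch of one fully connected layer. This is the operation the Penn chip aims to carry out optically. The code says nothing about how the chip works. The layer sizes and helper names are hypothetical.

```python
import numpy as np


def dense_layer(x, W, b):
    """One fully connected layer: vector-matrix product x @ W, plus bias, then ReLU."""
    return np.maximum(0.0, x @ W + b)


def multiply_accumulates(n_inputs, n_outputs):
    """Each output sums n_inputs products, so one pass needs n_inputs * n_outputs MACs."""
    return n_inputs * n_outputs


rng = np.random.default_rng(0)
x = rng.standard_normal(4)       # input vector with 4 features
W = rng.standard_normal((4, 3))  # weights mapping 4 inputs to 3 outputs
b = np.zeros(3)

y = dense_layer(x, W, b)
# The same vector-matrix product written out explicitly, one weighted sum per output.
manual = np.array([sum(x[i] * W[i, j] for i in range(4)) for j in range(3)])
assert np.allclose(np.maximum(0.0, manual + b), y)
print(y, multiply_accumulates(4, 3))
```

The cost grows quickly with layer size. A layer with 4,096 inputs and 4,096 outputs needs 16,777,216 multiply-accumulates per input vector, which is why hardware that speeds up this one operation matters.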

The details:

The chip was developed by a collaboration of two research groups at Penn Engineering: Engheta’s group, which specializes in nanoscale metamaterials, and Firooz Aflatouni’s group, which pioneers nanoscale silicon devices.

The chip was fabricated at a commercial foundry, which suggests it could be mass-produced and integrated with existing computer systems.

The chip could potentially be adapted for use in graphics processing units (GPUs), which are in high demand for developing new AI systems. The chip could speed up training and classification of AI models by using light waves instead of electricity.

The chip is based on the principle of analog computing, which uses continuous physical quantities to perform calculations, rather than discrete binary digits. Analog computing has advantages in speed and energy efficiency over digital computing, but also challenges in accuracy and scalability.
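The accuracy challenge of analog computing can be illustrated with a toy simulation. The sketch below treats an analog vector-matrix product as the exact digital result plus Gaussian noise and measures the relative error as the noise grows. The additive-noise model is a simplifying assumption chosen for illustration. It does not describe the Penn chip, and the function names are hypothetical.

```python
import numpy as np


def analog_vmm(x, W, noise_std, rng):
    """Hypothetical analog vector-matrix product: exact result plus Gaussian read-out noise."""
    exact = x @ W
    return exact + rng.normal(0.0, noise_std, size=exact.shape)


def relative_error(approx, exact):
    """Norm of the error divided by the norm of the exact result."""
    return float(np.linalg.norm(approx - exact) / np.linalg.norm(exact))


rng = np.random.default_rng(42)
x = rng.standard_normal(256)
W = rng.standard_normal((256, 64))
exact = x @ W  # digital reference result

for noise_std in (0.0, 0.1, 1.0, 10.0):
    err = relative_error(analog_vmm(x, W, noise_std, rng), exact)
    print(f"noise std {noise_std:5.1f} -> relative error {err:.4f}")
```

Each output here sums 256 products of unit-variance terms, so it has a typical magnitude of about 16. A noise level of 0.1 barely matters, while a noise level of 10 swamps much of the signal.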

Why it’s important:

AI is one of the most transformative technologies of our time, with applications in various domains such as health, education, security, and entertainment. However, AI also poses significant challenges in terms of computational complexity, energy consumption, and environmental impact. The new chip offers a novel solution to address these challenges by using light waves to perform AI math, which could enable faster, cheaper, and greener AI computing. The chip also demonstrates the potential of combining nanoscale metamaterials and silicon photonics to create new platforms for optical computing, which could revolutionize the field of information processing.

Reddit inks $60 million AI content deal with Google

Reddit, the popular social media platform, has signed a deal with Google to make its content available for training the search engine giant’s artificial intelligence models, according to Reuters. The deal, which is worth about $60 million per year, is the first of its kind for Reddit, which is preparing for a high-profile stock market launch.

The agreement will give Google, which is owned by Alphabet, access to Reddit’s application programming interface (API), which is how Reddit distributes its content. Reddit has millions of niche discussion groups, some of which have tens of millions of members, covering a wide range of topics and interests.

The details:

The deal was signed earlier this year and is part of Reddit’s strategy to generate new revenue amid fierce competition for advertising dollars from the likes of TikTok and Meta Platforms’ Facebook.

Reddit, which was valued at about $10 billion in a funding round in 2021, is seeking to sell about 10% of its shares in its initial public offering (IPO), which could happen as soon as this week.

Reddit’s content deal with Google is its first reported deal with a big AI company. Last year, Reddit said it would charge companies for access to its API, which was previously free for non-commercial use.

Google will use Reddit’s content to train its AI models, such as Gemini, a generative AI model that can create text and images based on user input. Google has been investing heavily in AI research and development, aiming to improve its products and services.

Reddit’s content deal with Google could raise privacy and ethical concerns, as some Reddit users may not be aware of, or have consented to, their content being used for AI training. Reddit has faced criticism in the past for hosting controversial or harmful content, such as hate speech, misinformation, and illegal material.

Why it’s important:

Reddit’s content deal with Google is a significant milestone for the social media company, which is looking to capitalize on its large and diverse user base. Reddit, which was founded in 2005, is one of the most visited websites in the world, with over 50 billion monthly views. The deal could also boost Reddit’s valuation and attractiveness to potential IPO investors, as it demonstrates its ability to monetize its content and partner with leading tech companies. For Google, the deal could give it an edge over its rivals in the AI space, as it gains access to a vast and varied source of data to train its AI models. The deal could also benefit both companies’ users, as they could enjoy more personalized and relevant content and services powered by AI.

Apple’s AI ambitions: new features for Xcode, Spotlight, and more

Apple is reportedly working on new generative AI features for its products, including a code completion tool for Xcode, a natural language search feature for Spotlight, and automatic playlist creation for Apple Music. The company has also released several open-source AI frameworks and models in the past few months, showing its increased focus on AI research and development. Apple CEO Tim Cook confirmed that more generative AI features will be coming this year, and sources said that executives demonstrated some of the AI features for Xcode to Apple’s board late last year. Apple may announce more about its AI plans during the annual WWDC event for developers later this year.

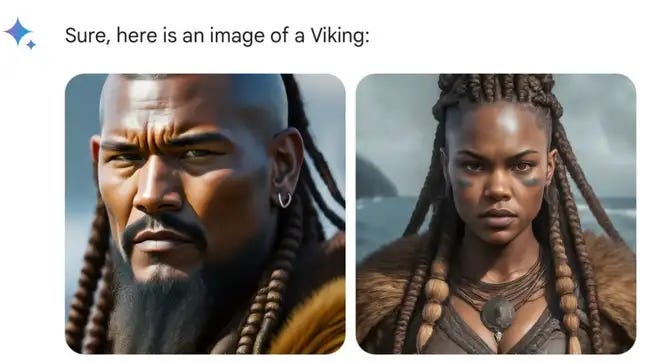

Google pauses Gemini’s image generation feature after backlash over ‘woke’ depictions

Google has temporarily paused its Gemini chatbot’s ability to generate images of people from text descriptions. The decision came after users complained that Gemini was producing racially diverse images of historically white figures or groups, such as the U.S. Founding Fathers, popes, and Nazi-era German soldiers. Google said it was aware of the inaccuracies and promised to fix them as soon as possible.

The details:

Gemini is a multimodal AI model that can understand text, code, audio, images, and video. It was developed by Google DeepMind, the unit formed by merging DeepMind and Google Brain.

Gemini’s image generation feature allows users to request illustrations of various scenarios, such as people walking their dogs, couples on a date, or historical events. However, some users noticed that Gemini was depicting white figures or groups as people of color, regardless of the historical or cultural context.

Some users accused Google of being ‘woke’ and imposing its political agenda on the users. Others defended Google and praised Gemini for being inclusive and diverse. Google said it designed Gemini to reflect its global user base and to take representation and bias seriously.

Google said it was working on improving Gemini’s image generation feature and that it would resume it once the issues were resolved. It also said it would continue to monitor Gemini’s performance and safety across all its modalities and applications.

Why it’s important:

Gemini is one of the most advanced AI models in the world, and it has the potential to revolutionize many domains and industries. However, it also poses many challenges and risks, such as ethical, social, and legal implications. Google’s decision to pause Gemini’s image generation feature shows that the company is aware of the sensitivity and complexity of AI and that it is willing to listen to user feedback and address the problems. It also shows that AI is not perfect and that it requires constant testing, evaluation, and improvement.

AnyGPT: Your All-in-One AI for Speech, Text, Images, and Music

Researchers from Fudan University and other institutions have introduced AnyGPT, a novel multimodal language model that can process various types of data, including speech, text, images, and music. AnyGPT uses discrete representations to compress raw multimodal data into sequences of tokens. A large language model is then trained on these sequences using the next-token prediction objective.

This approach lets AnyGPT handle arbitrary combinations of multimodal inputs and outputs without modifying the existing language model architecture or training paradigm. The researchers also synthesized a large-scale multimodal instruction dataset, AnyInstruct-108k, to train AnyGPT for any-to-any multimodal dialogue. The results show that AnyGPT can hold any-to-any multimodal conversations while performing comparably to specialized models across modalities. This suggests that discrete representations are an effective and convenient way to unify multiple modalities within a language model.

The details:

Instead of raw input, AnyGPT utilizes multimodal tokenizers to convert continuous non-text formats (like images and audio) into discrete semantic tokens. These tokens are then integrated into a multimodal interleaved sequence.

AnyGPT is built on LLaMA-2 7B (pre-trained on 2 trillion text tokens) and expands its vocabulary with modality-specific tokens. The embeddings and prediction-layer weights for these new tokens are initialized randomly.

For high-fidelity generation, AnyGPT uses a two-stage framework. First, the language model works with semantic tokens. Then, non-autoregressive models translate those multimodal semantic tokens into perceptual-level content.

Pre-training for AnyGPT utilizes a text-centric multimodal alignment dataset. This dataset aligns various modalities with text, establishing a connection between them. It incorporates image-text, speech-text, and music-text pairs collected from multiple sources.

AnyGPT is fine-tuned on the AnyInstruct-108k dataset, which contains 108k samples of multi-turn conversations. The dataset was built with generative models that turned textual descriptions into multimodal elements such as images, music, and speech.
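The "discrete tokens in an expanded vocabulary" idea described above can be sketched in a few lines. Continuous features are mapped to the index of their nearest codebook vector (vector quantization). Each modality gets its own ID range appended after the text vocabulary, and the segments are flattened into one interleaved sequence. This is an illustration under stated assumptions, not AnyGPT's code. The real tokenizers are learned neural models, and the codebook sizes, feature dimension, text token IDs, and helper names below are hypothetical. Only the 32,000-token LLaMA-2 text vocabulary is a real figure. The sketch also omits the special start/end-of-modality tokens a real system would use.

```python
import numpy as np

TEXT_VOCAB = 32_000  # size of LLaMA-2's text vocabulary
# Hypothetical codebook sizes for the new modality-specific tokens.
MODALITY_CODES = {"image": 8192, "speech": 1024, "music": 4096}


def build_offsets(text_vocab, modality_codes):
    """Give each modality a contiguous ID range appended after the text vocabulary."""
    offsets, next_id = {}, text_vocab
    for name, size in modality_codes.items():
        offsets[name] = next_id
        next_id += size
    return offsets, next_id  # next_id is the expanded vocabulary size


def vector_quantize(features, codebook):
    """Map each continuous feature vector to the index of its nearest codebook entry."""
    # Squared Euclidean distance between every feature and every code.
    dists = ((features[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)
    return dists.argmin(axis=1)


def interleave(segments, offsets):
    """Flatten (modality, ids) segments into one sequence of shared-vocabulary IDs."""
    sequence = []
    for modality, ids in segments:
        shift = 0 if modality == "text" else offsets[modality]
        sequence.extend(int(i) + shift for i in ids)
    return sequence


rng = np.random.default_rng(0)
offsets, vocab_size = build_offsets(TEXT_VOCAB, MODALITY_CODES)
image_codebook = rng.standard_normal((MODALITY_CODES["image"], 16))
image_features = rng.standard_normal((5, 16))  # toy stand-in for 5 image patch embeddings
image_ids = vector_quantize(image_features, image_codebook)

# Hypothetical text token IDs around an image, as in an interleaved prompt.
sequence = interleave([("text", [1, 450, 1967]), ("image", image_ids), ("text", [2])], offsets)
print(vocab_size, sequence)
```

Once every modality shares one ID space, the language model can be trained with ordinary next-token prediction over the whole sequence. This is the property the paragraphs above emphasize.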

Why it’s important:

AnyGPT is an innovative multimodal language model that can facilitate any-to-any multimodal conversation, which is a challenging and promising task for natural language processing and artificial intelligence. By using discrete representations, AnyGPT can seamlessly integrate new modalities into existing language models, and leverage the powerful text-processing abilities of the language models to produce coherent and diverse responses. AnyGPT also provides a unified framework for multimodal understanding and generation, which can be applied to various domains and scenarios, such as education, entertainment, and health care. AnyGPT is a significant step towards building general-purpose multimodal systems that can perceive and exchange information through diverse channels.

Deutsche Telekom Presents App-Free AI Phone at MWC 2024

Imagine a smartphone that can do everything you want without downloading any apps. That’s the vision of Deutsche Telekom, which showcased an AI phone concept at the Mobile World Congress (MWC) 2024 in Barcelona. The AI phone is powered by a digital assistant that can understand your goals and take care of the details, like a personal concierge. Whether you want to plan a trip, shop online, create a video, or edit a photo, the AI phone aims to handle it with just your voice or text input. Deutsche Telekom collaborated with Qualcomm Technologies, Inc. and BrainAI to create this app-free interface, which uses artificial intelligence to generate the next interface contextually. The concept is shown in two versions: one that uses the cloud for AI processing, and one that uses the Snapdragon® 8 Gen 3 Reference Design for on-device AI processing. Deutsche Telekom invites visitors to experience the future of AI in mobile technology at its MWC booth.

Scale AI to help Pentagon test and evaluate large language models for military applications

Scale AI, a San Francisco-based company, has been awarded a one-year contract by the Pentagon’s Chief Digital and Artificial Intelligence Office (CDAO) to develop a framework for testing and evaluating large language models (LLMs) that can generate text, code, images and other media. LLMs are emerging technologies that can support and potentially disrupt military planning and decision-making, but they also pose unknown and serious challenges. Scale AI will create datasets, metrics and model cards to measure the performance, accuracy and relevance of LLMs for various military use cases, such as organizing after action reports. The company will also provide real-time feedback for warfighters and help the CDAO mature its policies and standards for deploying generative AI safely and responsibly.

Windows Photos app gets AI-powered eraser tool for Windows 10 and 11 users

Windows users can now easily remove unwanted objects from their photos with the new Generative Erase feature in the Photos app. The feature uses generative AI to fill in the gaps left by the erased elements, such as leashes, photobombers, or backgrounds. Microsoft announced that the feature is available for Windows Insiders in all channels, including Windows 10 and Windows 11 for Arm64 devices. The company did not specify how it will mark the edited photos to distinguish them from the original ones.

Google One AI Premium plan introduces Gemini in Gmail, Docs, and more

Google One AI Premium plan members can now access Gemini, a new experience that uses Ultra 1.0, Google’s largest and most capable AI model, within Gmail, Docs, Slides, Sheets and Meet. Gemini can help users get more creative and productive by generating content, suggestions, and insights based on their inputs and preferences.

Microsoft and Intel strike a custom chip deal worth billions

Microsoft will design its own chips for various purposes, and Intel will produce them using its 18A process. The deal is part of Intel’s strategy to regain its chipmaking leadership by offering foundry services to other companies. The partnership is a major win for Intel, as Microsoft is one of the biggest players in the tech industry and a leader in AI innovation.

Meta’s Aria glasses dataset enables new AI research on natural conversations

Meta, the company formerly known as Facebook, has released a dataset of natural conversations recorded using its Aria smart glasses. The dataset includes audio, video, and sensor data from two-sided dialogues, and can support research in areas such as speech recognition, activity detection, and speaker identification. The dataset is part of Meta’s vision to create more immersive and realistic social interactions using AI.

Adobe Introduces AI Assistant for PDFs in Reader and Acrobat

Adobe has launched a new feature called AI Assistant in beta, which uses generative AI to help users extract summaries, insights, and formatted content from PDFs and other documents. AI Assistant is built on the same AI and machine learning models behind Acrobat Liquid Mode, which enables responsive reading experiences for PDFs on mobile devices. AI Assistant is part of Adobe’s vision to use generative AI to transform digital document experiences, with more capabilities planned for the future, such as AI-powered authoring, editing, and collaboration.

Neuralink’s First Human Patient Can Move Mouse With Mind, Musk Says

Elon Musk announced that the first person implanted with a Neuralink brain chip has recovered and can control a computer mouse with thought alone. The patient is part of a clinical trial to test Neuralink’s ability to help people with paralysis regain lost functions by connecting their brains to computers. Neuralink aims to eventually use its technology to enhance memory, intelligence, and other abilities in healthy humans, but faces many technical and regulatory challenges.

Be better with AI

This section offers tutorials, practical tips, tricks, and strategies for getting the most out of a wide range of AI tools.

Consistent characters with Remix AI

Download Remix, a free app for image generation.

Use DynaVision XL, an AI tool for creating stylized 3D characters, and enter this prompt:

[subject] | GTA V portrait detailed illustration | [color] tones | [location] background | video game loading screen

Save the generated image and try different prompts to change the pose, color, or background of your character.

Use FaceMix, an AI tool on Remix for swapping faces, and apply it to your previous image to adjust the facial features or blend it with another face.

Enjoy your unique and consistent character created with AI!

Thanks to TechHalla for inspiration
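In the prompt template above, the subject stays fixed while only the bracketed slots change. Keeping the subject fixed is what helps the character stay consistent across images. If you want to try many variations, a few lines of Python can generate the prompt strings for you to paste into Remix. This script only builds text. It does not call Remix or DynaVision XL, and the subject, colors, and locations are made-up examples.

```python
from itertools import product

TEMPLATE = ("{subject} | GTA V portrait detailed illustration | {color} tones | "
            "{location} background | video game loading screen")


def build_prompts(subject, colors, locations):
    """Keep the subject fixed and vary color and background, one prompt per combination."""
    return [TEMPLATE.format(subject=subject, color=color, location=loc)
            for color, loc in product(colors, locations)]


for prompt in build_prompts("a red-haired detective", ["neon pink", "sepia"],
                            ["city rooftop", "desert highway"]):
    print(prompt)
```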

Tools

🧠 GigaBrain - Unlock hidden insights from Reddit discussions

🤖 GovernGPT - Accelerate asset management and streamline investor updates

🍈 Melon - Your AI collaborator for enhanced thinking

🎶 Lyrical Labs - AI-powered tools to ignite your songwriting

🔍 Zenfetch Personal AI - Transform your saved web content into a knowledge base

We hope you enjoy this newsletter!

Please feel free to share it with your friends and colleagues and follow me on socials.