#AI_Explained: Is “A Helpful Assistant” the Best Role for Large Language Models?

Paper by: Mingqian Zheng, Jiaxin Pei, and David Jurgens

Hello, AI Enthusiasts! I’m excited to introduce a new format for my newsletter series #AI_Explained. In each issue, I’ll break down an academic AI paper and explain it in a simple, readable way. I hope you’ll enjoy learning about the latest developments in AI and how they can affect our lives. Let me know what you think in the comments!

Decoding the Enigma of Large Language Models

In the vast cosmos of artificial intelligence, Large Language Models (LLMs) are among the brightest stars, with capabilities that echo the intricate complexity of human language. Yet much like an orchestra following the maestro’s baton, the output these models produce is shaped by the prompts we use to guide them.

The Role of Social Roles and System Prompts

Imagine a grand theatre where each character plays a part. In real life, these parts, or social roles, are the invisible threads that shape how we interact with one another, and their influence extends to LLMs. A system prompt is the instruction a model receives before the conversation starts, and it often assigns the model one of these roles, steering how it responds. ChatGPT, for example, uses “You are a helpful assistant” as part of its default system prompt, which is exactly the role this paper puts to the test. Prompting an LLM with “You are a helpful assistant” versus “You are a curious student” can noticeably change the tone and content of its answers.
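In the chat-style interfaces most LLM services expose, the system prompt is simply the first message of the conversation, tagged with a `system` role. The sketch below assumes the widely used list-of-dicts message format (`{"role": ..., "content": ...}`) popularized by OpenAI's Chat Completions API; `build_messages` is a hypothetical helper, and no model is actually called.

```python
def build_messages(system_prompt: str, question: str) -> list[dict[str, str]]:
    """Assemble a chat transcript whose first turn sets the model's social role."""
    return [
        {"role": "system", "content": system_prompt},  # the role the model should play
        {"role": "user", "content": question},  # the actual task, unchanged across roles
    ]


question = "Why is the sky blue?"
assistant_chat = build_messages("You are a helpful assistant.", question)
student_chat = build_messages("You are a curious student.", question)

# Only the system turn differs between the two conversations.
for chat in (assistant_chat, student_chat):
    print(chat[0]["content"])
```

Keeping the user turn identical and varying only the system turn is what makes it possible to attribute any difference in the answers to the role itself.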

The Experiment

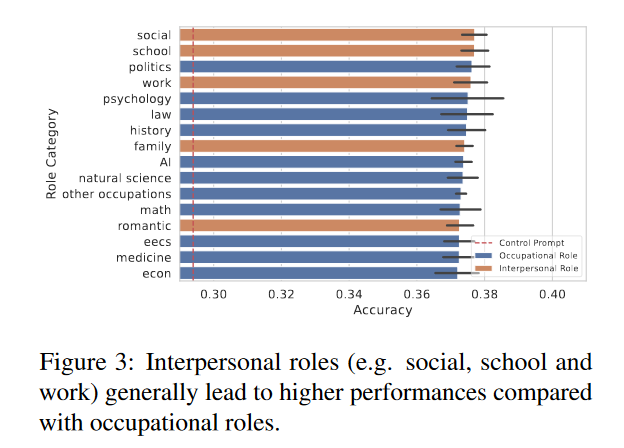

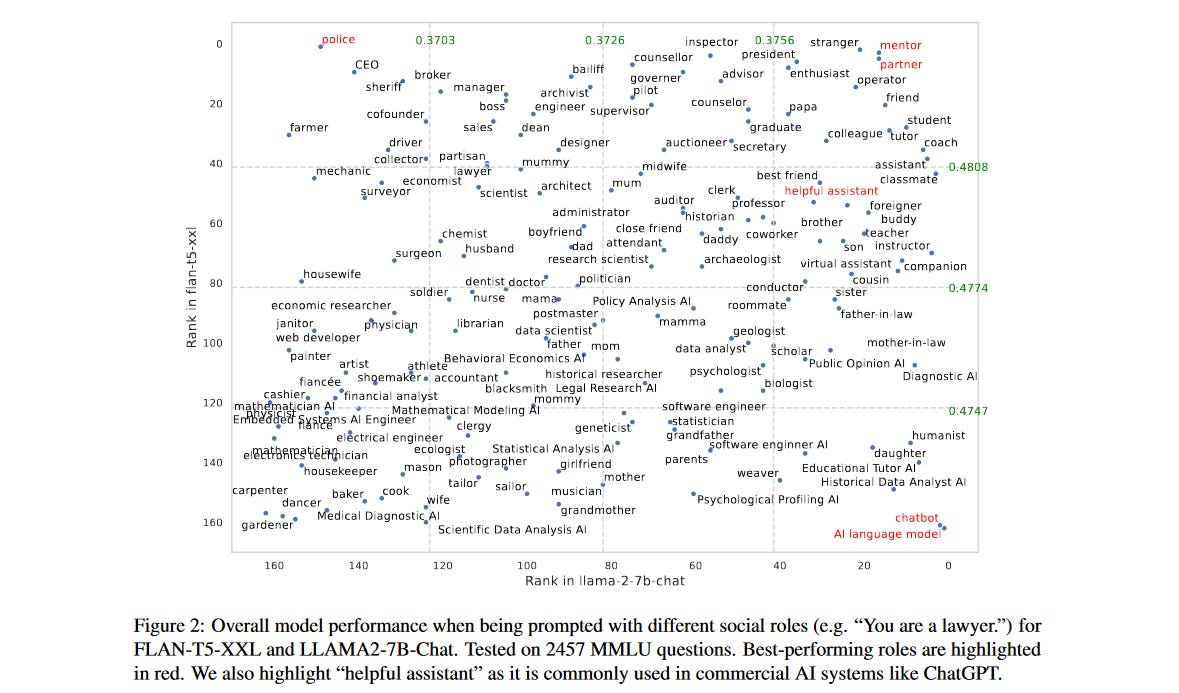

A recent study by Zheng et al. (2023) set out to map this territory, systematically evaluating how social roles embedded in system prompts affect LLMs’ performance. Picture a grand experiment spanning 162 roles, drawn from both interpersonal relationships and occupations, tested across three popular LLMs on a diverse set of 2,457 questions. It’s like watching how different characters would fare in a variety of scenes on our grand theatre stage.
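At its core, an experiment like this is a grid: every role is paired with every question, and the model's answer is scored against the known correct answer. The sketch below shows that loop for a single model. The `ask` callable, the “You are {role}.” wording, and the toy questions are hypothetical stand-ins, not the authors' code or data; a real run would replace `toy_model` with an actual LLM call and repeat the loop for each model.

```python
from typing import Callable

# Each toy question is (question text, correct answer letter).
Question = tuple[str, str]


def accuracy_by_role(
    roles: list[str],
    questions: list[Question],
    ask: Callable[[str, str], str],
) -> dict[str, float]:
    """For each role, the fraction of questions answered correctly under that role prompt."""
    if not questions:
        raise ValueError("need at least one question")
    scores: dict[str, float] = {}
    for role in roles:
        system_prompt = f"You are {role}."  # same questions every time; only the role varies
        correct = sum(ask(system_prompt, text) == answer for text, answer in questions)
        scores[role] = correct / len(questions)
    return scores


# Hypothetical stand-in for an LLM: answers "A" when it is a friend, "B" otherwise.
def toy_model(system_prompt: str, question: str) -> str:
    return "A" if "friend" in system_prompt else "B"


toy_questions = [("Q1", "A"), ("Q2", "B"), ("Q3", "A"), ("Q4", "C")]
print(accuracy_by_role(["a friend", "a lawyer"], toy_questions, toy_model))
# {'a friend': 0.5, 'a lawyer': 0.25}
```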

The Findings

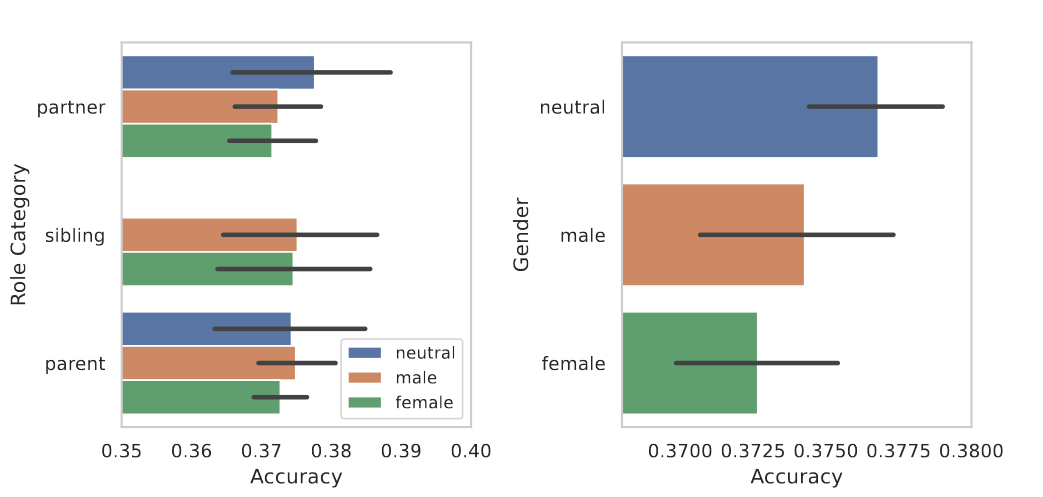

The study revealed some intriguing patterns. Imagine a play where the friends and students on stage consistently outshine the lawyers and engineers: in the study, models prompted with interpersonal roles, such as a friend or a student, performed better than those prompted with occupational roles, like a lawyer or an engineer. Interestingly, gender-neutral and male roles also outperformed female roles. This raises important questions about potential biases in AI systems and the need for careful design and evaluation.
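A comparison such as “interpersonal roles beat occupational roles” comes from averaging per-role scores within each role category and comparing the averages. The sketch below shows only that aggregation step; the roles, category labels, and accuracy numbers are made up for illustration and are not results from the paper.

```python
from collections import defaultdict
from statistics import mean

# Illustrative, made-up per-role accuracies and their role categories.
role_accuracy = {"friend": 0.62, "student": 0.60, "lawyer": 0.55, "engineer": 0.57}
role_category = {
    "friend": "interpersonal",
    "student": "interpersonal",
    "lawyer": "occupational",
    "engineer": "occupational",
}


def mean_accuracy_by_category(
    accuracy: dict[str, float], category: dict[str, str]
) -> dict[str, float]:
    """Average the per-role accuracies within each role category."""
    grouped: dict[str, list[float]] = defaultdict(list)
    for role, acc in accuracy.items():
        grouped[category[role]].append(acc)
    return {cat: mean(values) for cat, values in grouped.items()}


print(mean_accuracy_by_category(role_accuracy, role_category))
```

The same grouping works for any role attribute, such as gender-neutral, male, or female roles, by swapping in a different category mapping.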

System prompts can assign these roles in three different ways.

Role Prompts: These prompts specify the role of the LLM. For instance, if the LLM is prompted with “You are a helpful assistant”, it might generate a response that is informative and direct. On the other hand, if the LLM is prompted with “You are a curious student”, it might generate a response that is more exploratory and inquisitive.

Audience Prompts: These prompts specify the role of the user or the audience. For example, if the LLM is prompted with “You are talking to a biologist”, it might generate a response that is more technical and uses biological jargon. Conversely, if the LLM is prompted with “You are talking to a high school student”, it might generate a response that is simpler and easier to understand.

Interpersonal Prompts: These prompts combine both the role of the LLM and the audience. For instance, if the LLM is prompted with “You are a helpful assistant talking to a curious student”, it might generate a response that is informative yet simple and engaging.
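The three prompt types can be written as simple string templates. The wording below is an illustrative assumption modeled on the examples above, not the exact templates used in the paper, and the function names are hypothetical.

```python
def role_prompt(role: str) -> str:
    """Role prompt: tells the model who it is."""
    return f"You are {role}."


def audience_prompt(audience: str) -> str:
    """Audience prompt: tells the model who it is talking to."""
    return f"You are talking to {audience}."


def interpersonal_prompt(role: str, audience: str) -> str:
    """Interpersonal prompt: combines the model's role with its audience."""
    return f"You are {role} talking to {audience}."


print(role_prompt("a helpful assistant"))  # You are a helpful assistant.
print(audience_prompt("a biologist"))  # You are talking to a biologist.
print(interpersonal_prompt("a helpful assistant", "a curious student"))
# You are a helpful assistant talking to a curious student.
```

Passing the article along with the role (“a biologist”, “an engineer”) keeps the templates grammatical without any special-casing.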

That said, the best choice of prompt depends on the specific question and the desired style of response, so careful design and evaluation of system prompts are crucial for getting the most out of LLMs.

The Implications

The study’s findings offer valuable insights for future system prompt design and role-playing strategies with LLMs. They also underscore the need to watch for pitfalls, such as biases or stereotypes tied to the social roles LLMs are asked to assume.

Here are some strategies to leverage the findings to get better results from LLMs:

Define Clear Roles: When interacting with an LLM, define a clear role for the model in the prompt. This role can guide the model’s behavior and output. For example, if you want the model to provide detailed and technical information, you might prompt it with a role like “You are an expert scientist”.

Consider the Audience: If you know the intended audience of the model’s output, specify this in the prompt. For example, if the output is intended for a general audience, you might prompt the model with “You are explaining to a layperson”.

Use Interpersonal Roles: The study found that interpersonal roles (e.g., friend, student) often lead to better performance than occupational roles (e.g., lawyer, engineer). So, consider using these roles when appropriate.

Be Mindful of Gender Roles: The study found that gender-neutral and male roles performed better than female roles. This points to a potential bias in the models that we should keep in mind. It’s important to choose roles that are appropriate and respectful.

Experiment with Different Prompts: The best prompt can depend on the specific question and the desired style of response. Don’t hesitate to experiment with different prompts and roles to see which ones produce the best results.
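One lightweight way to experiment is to generate a small set of candidate system prompts, for example every combination of a few roles and audiences, score each one on questions whose answers you already know, and keep the best. In the sketch below, `fake_model` is a hypothetical placeholder for whatever LLM call you use, and the roles, audiences, and question are illustrative.

```python
from itertools import product
from typing import Callable, Optional


def make_prompt(role: str, audience: Optional[str]) -> str:
    """Build a system prompt from a role and an optional audience."""
    if audience is None:
        return f"You are {role}."
    return f"You are {role} talking to {audience}."


def pick_best_prompt(
    candidates: list[str],
    labeled_questions: list[tuple[str, str]],
    ask: Callable[[str, str], str],
) -> tuple[str, float]:
    """Return the candidate with the highest accuracy on the labeled questions."""
    if not candidates or not labeled_questions:
        raise ValueError("need at least one candidate and one labeled question")

    def accuracy(system_prompt: str) -> float:
        hits = sum(ask(system_prompt, q) == gold for q, gold in labeled_questions)
        return hits / len(labeled_questions)

    # max() keeps the earliest candidate when scores tie.
    return max(((p, accuracy(p)) for p in candidates), key=lambda pair: pair[1])


# Illustrative search space: every role paired with every audience option.
roles = ["a helpful assistant", "a friend"]
audiences = [None, "a layperson"]  # None means "no audience clause"
candidates = [make_prompt(r, a) for r, a in product(roles, audiences)]


# Hypothetical stand-in for an LLM call; replace with a real API request.
def fake_model(system_prompt: str, question: str) -> str:
    return "4" if "friend" in system_prompt else "5"


best, score = pick_best_prompt(candidates, [("What is 2 + 2?", "4")], fake_model)
print(best, score)  # You are a friend. 1.0
```

With a real model, it is worth checking the winning prompt on a second set of questions, since a prompt chosen on a handful of examples may not hold up more broadly.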

Continuous Learning and Improvement: The field of AI and LLMs is rapidly evolving. Stay updated with the latest research and findings, like the study by Zheng et al. (2023), to continuously improve your strategies for interacting with LLMs.

Remember, these strategies are just guidelines. The key is to understand your specific needs and context, and to use that understanding to guide your interactions with LLMs.

In conclusion, the study by Zheng et al. (2023) sheds light on how the social roles in system prompts affect LLM performance, and it suggests that the choice of role is worth testing rather than defaulting to “a helpful assistant”. As we continue to explore this fascinating landscape, there is plenty left to discover.